Evaluation for perception systems

Object detection

In recent years, the ML community has made huge progress in object detection through models such as Faster R-CNN, Mask R-CNN, and YOLO, among others. In this section, we look at the metrics commonly used to evaluate such systems.

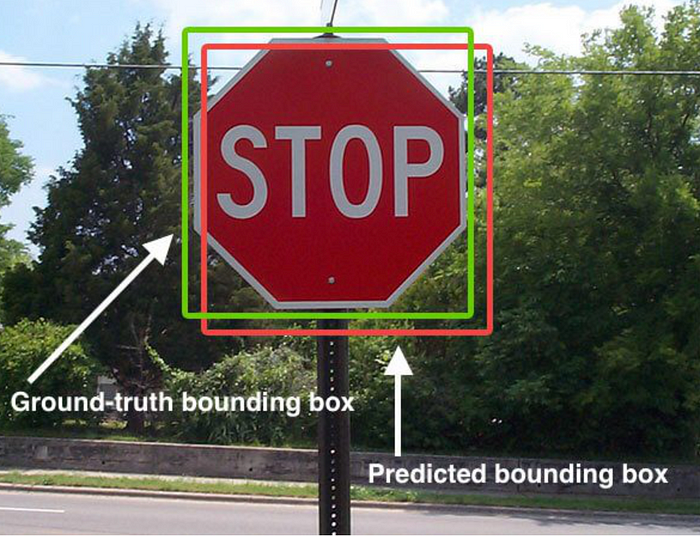

Let’s assume the system outputs a predicted class and a bounding box for each detected object.

- Intersection over Union (IoU) : IoU measures the overlap between the ground-truth bounding box (gt) and the predicted bounding box (pred). It is calculated as the area of the intersection of gt and pred divided by the area of their union. It is also widely used in human annotation jobs, where a minimum IoU requirement ensures that the delivered annotations have the desired quality.

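- Precision, Recall : Precision and recall can be computed from the predicted object classes and the ground truth. If bounding boxes are also provided, we can use an IoU threshold: a prediction counts as a true positive only if its IoU with a ground-truth box of the same class meets the threshold.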

- Average Precision (AP) : AP is defined as the area under the precision-recall curve, which is traced out by varying the confidence threshold on the predictions. AP is calculated individually for each class, so there are as many AP values as there are classes. If one accepts more positives by lowering the confidence threshold, recall will increase but false positives may also increase, decreasing the precision score. For a good model, both precision and recall should remain high even as the confidence threshold varies.

- Average Precision @ IoU threshold (AP@x) : Average precision can be computed at several IoU thresholds, such as 0.5 and 0.75. These are denoted AP@50 and AP@75 respectively. One can also define a metric that averages AP over N such thresholds, for example [0.5, 0.75, 0.9, 0.95].

- Mean average precision (mAP) : mAP is computed by taking the mean of the AP values over all classes. It can be used as a single metric for the quality of an object detection system.
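
To make the definitions above concrete, here is a minimal Python sketch of IoU for axis-aligned boxes, AP for a single class, and mAP. The function names, the `(x_min, y_min, x_max, y_max)` box format, and the greedy matching rule are assumptions made for illustration. Benchmark suites typically also interpolate the precision-recall curve before taking the area, which this sketch does not do.

```python
from typing import Dict, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def box_iou(a: Box, b: Box) -> float:
    """Area of intersection divided by area of union for two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    preds: Sequence[Tuple[float, Box]],  # (confidence, box) pairs for one class
    gts: Sequence[Box],                  # ground-truth boxes for the same class
    iou_threshold: float = 0.5,
) -> float:
    """Area under the raw (un-interpolated) precision-recall curve."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    # Walking down the confidence ranking is equivalent to lowering the
    # confidence threshold one prediction at a time.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        # Assumed matching rule: take the best-overlapping ground truth
        # that has not already been claimed by a more confident prediction.
        best_iou, best_idx = 0.0, -1
        for i, gt in enumerate(gts):
            if matched[i]:
                continue
            iou = box_iou(box, gt)
            if iou > best_iou:
                best_iou, best_idx = iou, i
        if best_idx >= 0 and best_iou >= iou_threshold:
            matched[best_idx] = True
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / len(gts)
        ap += precision * (recall - prev_recall)  # rectangle under the curve
        prev_recall = recall
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy data: two ground-truth boxes, three predictions (one is a false positive).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (20, 20, 30, 30))]
print(average_precision(preds, gts, iou_threshold=0.5))  # about 0.833
```

Calling `average_precision` with `iou_threshold=0.75` gives AP@75 under the same assumptions, and averaging the results over several thresholds gives the multi-threshold variant described above.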

Semantic segmentation :

Another very common task performed by perception/CV systems is semantic segmentation. The primary goal is to predict a class label for each pixel in the image. It is used in self-driving and content classification, among other applications.

- Pixel Accuracy : The percentage of pixels in the image that are correctly classified. Pixel accuracy is commonly reported for each class separately as well as globally across all classes.

- Intersection over Union (IoU) : This is the same metric described in the object detection section above, applied to pixels instead of boxes. It quantifies the percent overlap of pixels between the target mask and the prediction output.

- Dice Coefficient : The Dice coefficient is 2 * the area of overlap divided by the total number of pixels in the target and the prediction combined. It is equivalent to the F1 score. For a single image, Dice and IoU are tied together by Dice = 2 * IoU / (1 + IoU), so they always rank individual predictions the same way. They differ once scores are averaged over a set of images: the IoU metric tends to penalize single instances of bad classification more than the Dice (F) score does, even when both agree that the instance is bad. When comparing classifier A with classifier B, suppose the vast majority of predictions are moderately better with A, but a few are significantly worse with A. It may then be the case that the Dice coefficient favors classifier A while the IoU metric favors classifier B.

- Total misclassifications : If an image has only a single pixel of some detectable class, and the classifier detects that pixel and one other pixel, its Dice coefficient is a lowly 2/3 and its IoU is even worse at 1/2. Trivial mistakes like these can seriously dominate the average score taken over a set of images. A metric commonly used to mitigate this is total misclassifications, the number of false negatives plus false positives (FN + FP).
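
The segmentation metrics above can be sketched in a few lines of Python for a single class. This is a minimal illustration, assuming masks are flattened lists of 0/1 values; the function names and the convention of returning 1.0 for IoU and Dice when both masks are empty are assumptions, not a standard. The example reproduces the single-pixel case from the last bullet.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(pred: Sequence[int], target: Sequence[int]) -> Tuple[int, int, int, int]:
    """Count (TP, FP, FN, TN) over two flattened binary masks (1 = class pixel)."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("masks must be non-empty and the same length")
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn


def segmentation_metrics(pred: Sequence[int], target: Sequence[int]) -> Dict[str, float]:
    """Pixel accuracy, IoU, Dice, and total misclassifications for one class."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    union = tp + fp + fn
    return {
        "pixel_accuracy": (tp + tn) / (tp + fp + fn + tn),
        # Assumed convention: two empty masks count as a perfect match.
        "iou": tp / union if union else 1.0,
        "dice": 2 * tp / (2 * tp + fp + fn) if union else 1.0,
        "misclassified": fp + fn,
    }


# One true class pixel; the classifier finds it plus one extra pixel.
target = [1, 0, 0, 0]
pred = [1, 1, 0, 0]
print(segmentation_metrics(pred, target))
# Dice is 2/3 and IoU is 1/2, yet only a single pixel is misclassified.
```

On this toy image the Dice and IoU scores look poor while the misclassification count is just 1, which is why reporting FN + FP alongside the overlap metrics helps put small errors in proportion.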

Conclusion :

- We studied various metrics used to evaluate perception systems for object detection and semantic segmentation.

- We studied some of the pitfalls of commonly used metrics such as IoU and the Dice coefficient.

- The best way to evaluate a classifier is to report a wide range of metrics and study the anomalies.